The Boomerang Mood Swing That Facebook Caused

Facebook has found itself in hot water after it was revealed that it conducted an experiment on nearly 700,000 users in 2012. In this experiment, Facebook manipulated these users’ news feeds so that some users saw fewer positive posts and others saw fewer negative posts, to see whether this would elicit specific emotional responses. The experiment was conducted by Facebook together with Cornell University and the University of California, and it was government sponsored.

Even though the experiment was conducted two years ago, it is making news now because the results have just been published in the journal PNAS (Proceedings of the National Academy of Sciences).

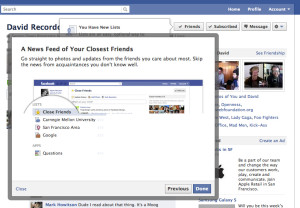

The authors of the published study stated that “Facebook already filters a user’s news feed content via a ranking algorithm to show them content that would interest them the most.” They also stated that, for this experiment, the same algorithm filtered content based on how emotional it was. A post counted as emotional if it contained at least one word identified as either positive or negative.

The authors described the results as follows: “for people who had positive content reduced in their news feed, a larger percentage of words in people’s status updates were negative and a smaller percentage were positive. When negativity was reduced, the opposite pattern occurred. These results suggest that the emotions expressed by friends, via online social networks, influence our moods, constituting, to our knowledge, the first experimental evidence for massive-scale emotional contagion via social networks.” The authors also stated that these posts affect whether users post positive or negative things in response, in order to keep up certain appearances to their friends.

In other words, these results are obvious. If we read negative posts, we are most likely going to write negative posts ourselves. If we read positive posts, we are most likely going to write positive posts ourselves. What our friends post affects both our moods and what we post. For example, some single users are going to be jealous when a friend changes their relationship status from single to in a relationship, engaged or married. Other users may be jealous of a friend’s new baby or job. So to “remedy” this, if you will, users will brag about their latest adventures or the great things in their lives, in order to seem happy or even to compete with their friends’ happiness. I know I have done some of these things in the past, and I’m sure many other people have too. Not everyone reacts the same way, though.

The main criticism of this experiment is that Facebook was manipulating users’ moods, and the possible consequences of doing so. This criticism has come from experts in the field and from users themselves. When news of the experiment emerged, users described it as creepy, evil and scary, among other things. However, the criticism also stems from a much bigger issue: Facebook may have broken the law by conducting the experiment without the affected users’ consent. The PNAS journal itself seems to regret how the experiment was conducted. Whether Facebook has broken any laws remains to be decided. However, there will probably be some loophole in the terms and conditions users agree to when signing up to Facebook that prevents any serious charges.

Facebook itself has said that it conducted the experiment because it cares about the emotional impact it has. It also wanted to investigate what it calls “the common worry” that when users post positive content, their friends feel left out. However, Facebook seems to reveal an ulterior motive when it states that it also worries that exposure to negative posts might affect users so greatly that they give up on Facebook altogether.

I personally don’t understand why there was a need for this experiment when the results were always going to be obvious. Of course everyone who uses Facebook is going to be affected by what they see, both positive and negative. However, assuming what users are going to feel is walking into dangerous territory. Everybody reacts differently to what they see and read; that’s all part of being human. But that doesn’t mean you should alter “Facebook reality” to try to please or aggravate them. Some of us need to feel what we need to feel, and some of us need to develop thicker skins. There are always going to be people who have better or worse lives than us. This is the reality of life, and altering what people see online doesn’t change that.

If there is a loophole in its terms and conditions that makes gaining this information legal, that doesn’t mean Facebook should have used it. Even if Facebook conducted this experiment out of genuine care for its users, its methods suggest that it abandoned that care for its own gain. Legal doesn’t always mean ethical.

The study’s authors also stated that “their results are primarily significant for public health purposes because small individual effects can end up with large social consequences”. Considering the amount of backlash this experiment and its results have received, I find the irony of this statement amusing.

What do you think of Facebook’s “mood swing” experiment? Feel free to comment below.